Soft Realtime Performance of Rclpy

Using ROS2 Python Bindings for Soft-Real Time Applications on Raspberry Pi

We found that the ROS2 Eloquent Python bindings on a Raspberry Pi should only be used when real-time is not required or the used nodes communicate only few data over the ROS2 system. The design for modularity significantly helps in flexibly deploying our robotics system on different targets.

Introduction

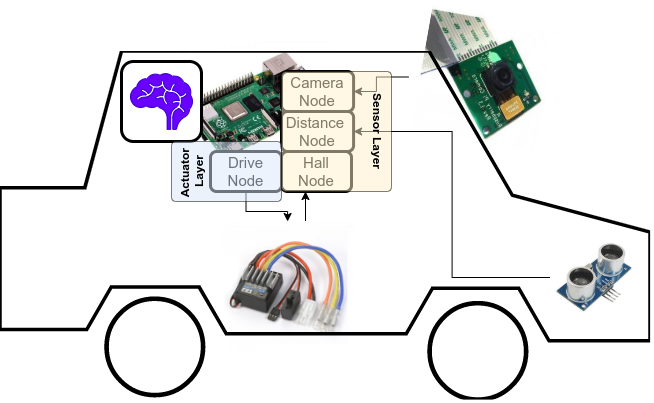

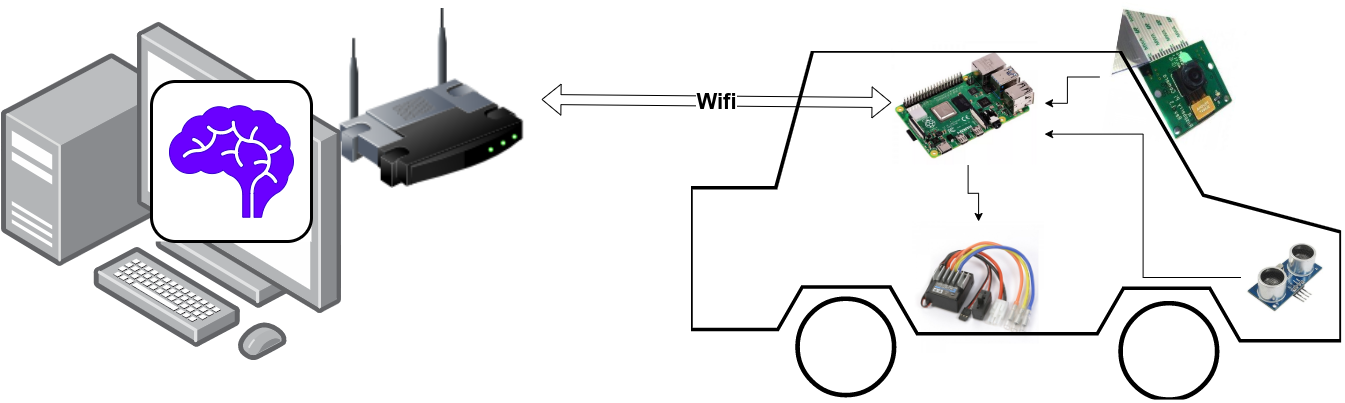

We currently develop a demonstrator about connected cars, which drive in a convoi. A fundamental building block of the demonstrator is a model car which drives autonomously on a track. The model car uses standard RC car ESC for motor control and servos for steering. On the sensor side, the car is equipped with a wide-angle camera and ultrasonic distance sensor pointing to the front, a reflectance sensor to detect the line below the car and a motor encoder (Hall sensor) to measure the car's speed. As the computing unit, the car is equipped with a RaspberryPi 4 together with an Emlid Navio2 Navigation board. The entire model is programmed with the ROS2 Eloquent framework. Moreover we required, that we can easily test our control setup in a realistic computer simulation.

Given the philosophy of ROS2, we decided to split up the processing chain into several modules:

- The sensor layer publishes measurements from distance sensor, reflectance sensor, camera sensor and hall sensor as appropriate ROS2 messages.

- The actuator layer, namely the drive node, translates ROS2 messages for drive commands into the appropriate electrical commands for the ESC and steering servo.

- The vehicle's brain subscribes to the sensor values. It performs elaborate calculations like image processing to detect the lane and performs throttle control to control the distance to the front car. Eventually, the brain publishes the drive commands towards the actuator nodes.

The sensor and actuator nodes were entitled the ultra thin layers because they merely translate from electrical signals into ROS2 messages without any further processing. Using this setup, we could easily reuse the brain to run it in a simulation, where we only had to rewrite the ultra-thin layers in the simulator.

However, even though the cars drove well in the simulator when run on our development PCs, in reality the cars sporadically would leave the track or crash against the wall.

Analysis

Since the steering of the vehicles was solely controlled by the camera sensor and image processing we first thought that the image processing algorithm would be wrong and guide the vehicle off the track. Accordingly, we dumped data from the real track and analyzed the algorithm offline.

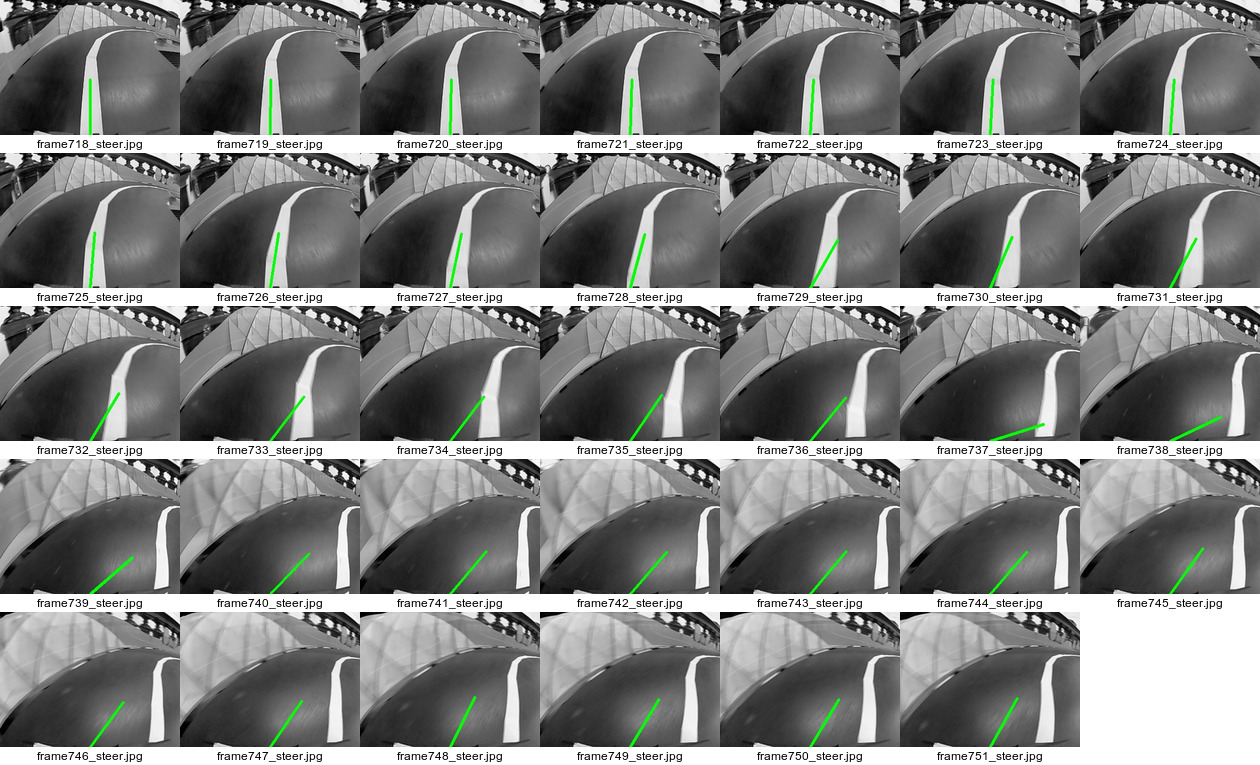

As it turned out, despite the image processing working reliable after some tweaks, the cars would sporadically leave the track. A further analysis revealed that in an examplary critical situation the car's brain indeed commanded to steer correctly, but the steering was performed with quite some delay:

What is visible in above picture is that the brain indeed says "drive right!", but the car does not react. The time between first command to steer right (frame727) and the car actually turning (frame739 or 740) is 12 or 13 frames, meaning 0.25s of delay. Aforementioned frame numbers were subjectively obtained from the measurements. The frame where the steering command was started was chosen to be the frame where the green line (the calculated steering angle) starts really pointing to the right (around frames 726-728). The frame where the actual steering is performed is identified as the frame where the lane stops moving closer to the right border of the image (frame 740).

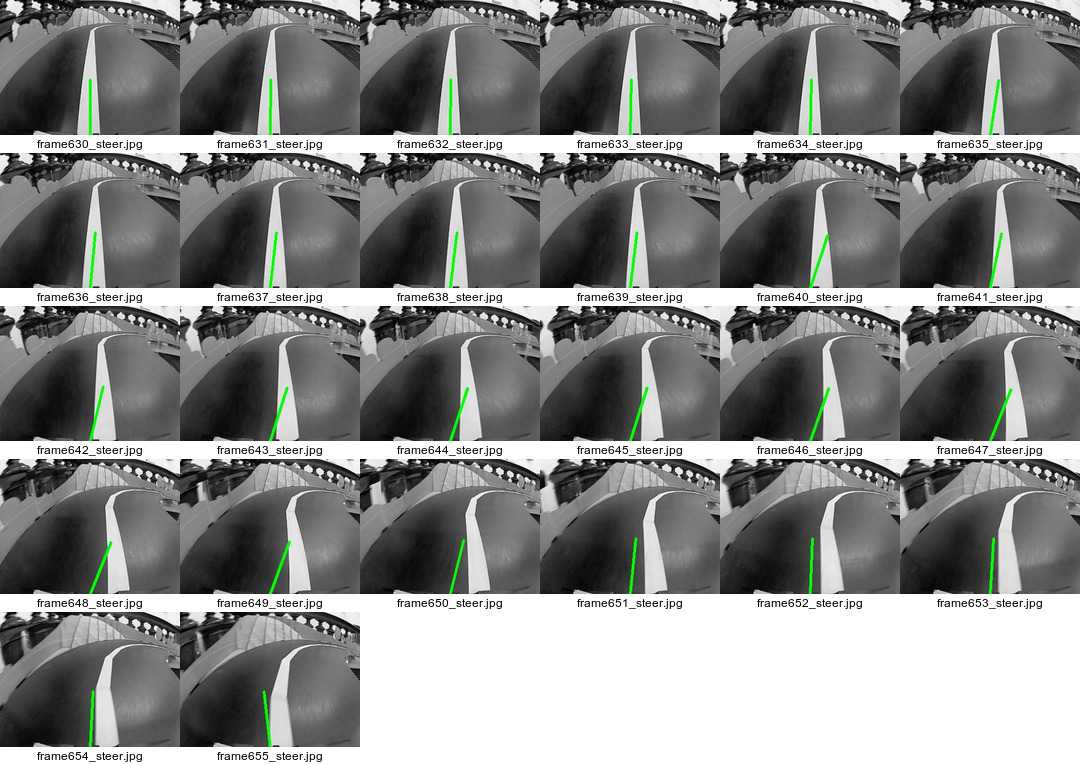

A similar situation was observed in this record:

By some situation, the car gets a tendency to the left in frame639. The controller immediately steers to the right, but the car reacts only in frame650, again a delay of 0.2s. Apparently either within the brain there was a delay between the steering calculation and the publishing of the message, or the drive node received messages too late.

In addition to these findings, we figured out that within the brain the update loop for calculating the next steering angles were also delayed. The update loop in the brain was implemented by a ROS2 timer similar to:

class Brain(Node):

def __init__(self):

# ...

self.create_timer(1/50, self._update)

# ...

def _update(self):

# perform steering calculation and send it to the drive node.

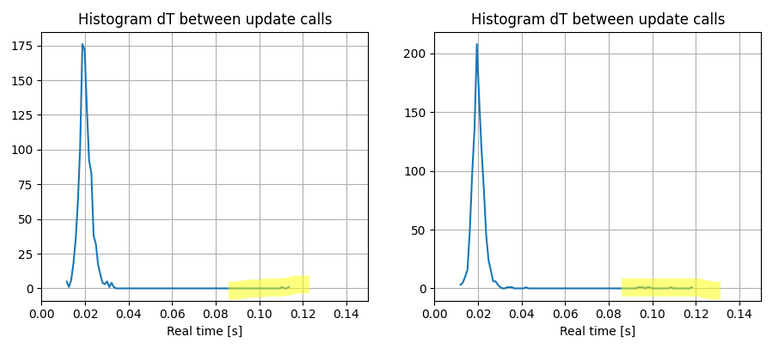

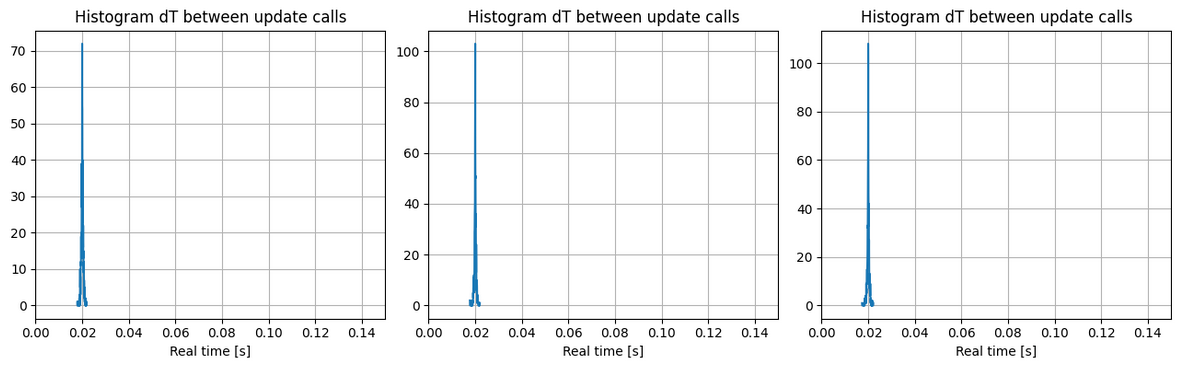

self._publishSteeringAngle()The figure below, measured on the RaspberryPi, shows the histogram of time-delays between subsequent update calls for two independent measurements.

The control loop runs at 50Hz, hence we expect a loop time of 0.02s. However, as marked in yellow, there are spurios values at delays as high as 0.1s. This means that sometimes the update loop is called only every 100ms and hence the reaction time would be at least that slow. Additionally, we observed that also measurements from the ultra thin layers were received with quite some delay of also up to 200ms. Clearly, this was not a tolerable state.

Solutions

Given these findings, we tackled the problem as follows:

- Let ROS2 log messages to files on a RAM disk instead onto the SD card. We chose this solution, because we additionally observed that writing to the RPi SD card can block the entire processing once a decent amount of data is written. Moreover, we did not want to wear out our SD cards too qickly.

- Reimplement the ultra thin layers as ROS2 nodes in C++. The motivation was to overcome the garbage collection phases of Python, which we thought to produce these sporadic delays. Moreover, we saw that the CPU usage of the ROS2 Python Nodes was quite high. Reimplementing in C++ was not too laborious, because these nodes had only very limited functionality.

- Move the brain from the Raspberry Pi to a more powerful computer. We went for this solution, because rewriting the brain in C++ would have only been a last resort, due to the complexity of the contained algorithms. Here, Python really shines in being able to write flexible code very quickly.

Solution one was a no-brainer and quickly implemented by the aid of several online resources

The implementation of the ultra thin layers as C++ nodes was accomplished quickly due to the simplicity of the programs. In short, we migrated below Python code to a C++ equivalent.

class UltraThinLayerSensor(Node):

def __init__(self):

self.create_timer(1/50, self._publishMeasurement)

def _publishMeasurement(self):

# ask hardware for new data

# publish it to ROS2We could not directly evaluate the latency improvement, but it was easy to compare the CPU usage of each sensor/actuator node on the Raspberry Pi:

| DriveNode | CameraNode | HallSensorNode | |

|---|---|---|---|

| Python | 13.9% | 50% | 10% |

| C++ | 4% | 22% | 2% |

Apparently, the CPU usage decreased significantly, which let us hope that strong delays would be at least more rare.

In addition, we connected the Raspberry Pi via Wifi to a stronger PC (Intel i7-9600 with 32GB RAM) and let the brain run on that device. Here, the benefit of a modular design payed off once again, because it was no issue at all to move the brain to another device, because ROS2 takes care of discovery and transport of data between the modules.

When the brain was running on that powerful machine, we observed a significantly better driving performance. There were no sporadic failures anymore, and we could even let the cars drive faster compared to the RaspberryPi-only solution. To quantify the improvement, we again performed the measurement of the update loop, and obtained these histograms (results of three independent measurements):

Clearly, the update loop runs very accurate and there is no jitter. Apparently, our Raspberry Pi was just too slow for the task at hand. On the downside, we needed to stream the camera images and drive commands over Wifi, which brought its own complications, like lost packets or dropped connections. We could overcome this problem with a high-quality Wifi access point. It remains to be evaluated how such setup behaves in a situation where many Wifi networks interfer each other like in a public fair.

Conclusions

Throughout our work, we have learned that on the Raspberry Pi ROS2 nodes can be written in Python only when there are no timing constraints. Moreover, we experienced that in particular the ROS2 Eloquent rclpy layer imposes quite some CPU and latency overhead compared to a raw C++ version. However, this might be different with more recent ROS2 versions.

We experienced first-handed the benefits of a modular system design. Only due to the modularization of our system we could quickly move the brain to another PC without needing to rewrite any code.